Nvidia’s recent Computex keynote painted a compelling picture of a future deeply integrated with AI, from telecommunications and quantum computing to advanced robotics and digital twins. The overarching theme revolved around building a software-defined world, powered by incredibly fast and scalable computing.

Building 6G on AI: The Future of Telecommunications

Nvidia is making significant strides in telecommunications, focusing on a fully accelerated Radio Access Network (RAN) stack that promises incredible performance. After six years of refinement, their RAN stack is now on par with state-of-the-art ASICs in terms of data rate per megawatt or data rate per watt. This achievement paves the way for layering AI on top of 5G and 6G networks.

Nvidia is collaborating with major players in the industry:

- Trials: SoftBank, T-Mobile, Indosat, Vodafone

- Full Stack Development: Nokia, Samsung, Kyocera

- Systems: Fujitsu, Cisco

Quantum Computing and CUDA Q

Nvidia is also venturing into quantum computing with their CUDA Q platform, a quantum-classical or quantum GPU computing platform. While quantum computing is still in the “noisy intermediate-scale quantum” (NISQ) state, many applications are already being explored. GPUs are envisioned to play a crucial role in pre-processing, post-processing, error correction, and control in future quantum systems. The prediction is that “all supercomputers will have quantum accelerators, all have quantum QPUs connected to it.”

Grace Blackwell NVL72: Scaling Up for Thinking AI

A major highlight of the keynote was the Grace Blackwell system, designed to handle “thinking AI” or “reasoning AI” by enabling “inference time scaling.” This new system addresses the immense computational demands of generating complex thoughts and iterating before producing an answer.

Jensen Huang emphasized the difficulty of “scaling up” – transforming a computer into a giant computer – compared to “scaling out” (connecting many computers). Grace Blackwell achieves this by pushing beyond the limits of semiconductor physics.

Grace Blackwell Production & Upgrades:

- Full Production: Blackwell systems based on HGX have been in full production since late last year and available since February. Grace Blackwell systems are now coming online daily.

- Availability: Already being used by many CSPs and available in CoreWeave for several weeks.

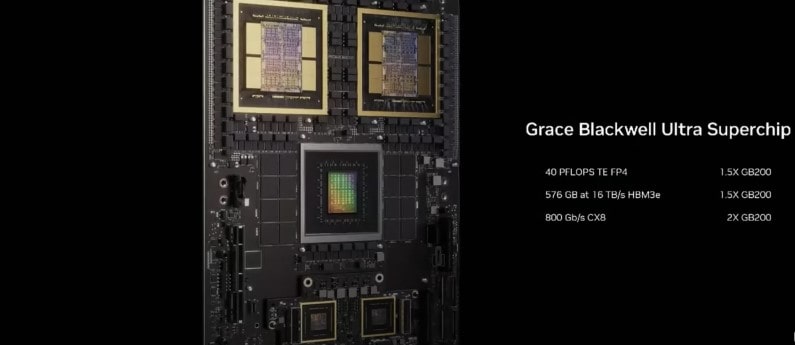

- Q3 Upgrade: In Q3, Nvidia will upgrade to Grace Blackwell GB300. This upgrade maintains the same architecture, physical footprint, and electrical/mechanicals, but features upgraded chips.

- Performance Improvements (GB300):

- 1.5x more inference performance

- 1.5x more HBM memory

- 2x more networking

The development of Blackwell and NVLink for this generation has made it possible to create incredibly large and powerful systems, with a vast ecosystem of 150 companies contributing over three years.

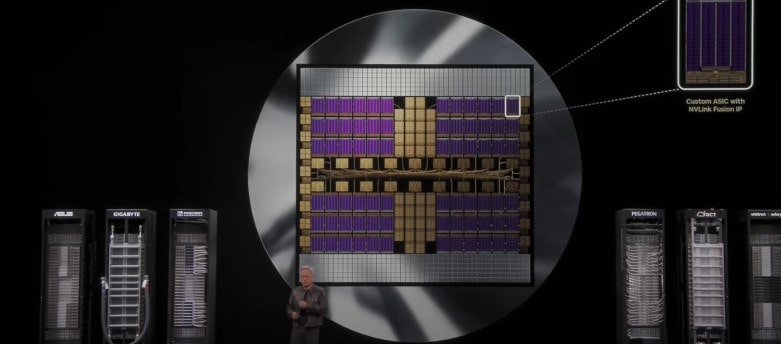

NVLink Fusion: Semi-Custom AI Infrastructure

Nvidia is democratizing AI infrastructure development with the announcement of NVLink Fusion. This allows for the creation of semi-custom AI infrastructure, moving beyond just semi-custom chips. The goal is to enable diverse AI infrastructures, whether they prioritize CPUs, Nvidia GPUs, or semi-custom ASICs.

NVLink Fusion addresses the challenge of scaling up these complex semi-custom systems.

How NVLink Fusion Works:

- Nvidia Platform: A 100% Nvidia system includes Nvidia CPU, Nvidia GPU, NVLink switches, and Spectrum-X or Infiniband networking.

- Custom ASIC Integration: Partners can integrate their custom TPUs, ASICs, or other accelerators using an NVLink chiplet, which is a switch that abuts directly to their chip. IP will be available for integration.

- Custom CPU Integration: Users can integrate their own CPUs by using Nvidia’s NVLink chip-to-chip interface, connecting their ASIC with NVLink chiplets directly to Blackwell and future Ruben chips.

DGX Spark AI Computer & DGX Station

Nvidia is making AI supercomputing more accessible with new form factors:

- DGX Spark: Designed for “AI native developers” – students, researchers, and developers who want a personal, always-on AI cloud for prototyping and early development.

- Performance: One petaFLOPS and 128 gigabytes of memory.

- Comparison: Remarkably, its performance is quite similar to the 2016 DGX1, which was a 300lb machine, showcasing a decade of incredible achievement in miniaturization and efficiency.

- Availability: Expected in a few weeks, with partners like Dell, HP, Asus, MSI, Gigabyte, and Lenovo offering different versions.

- Accessibility: “Everybody can have one for Christmas.”

- DGX Station: Billed as a “personal DGX supercomputer,” this desk-side unit offers the most performance achievable from a wall socket.

- Power Limit: The power consumption is so high that running a microwave simultaneously might be the limit for a kitchen outlet.

- Scalability: The programming model is identical to the giant data center systems, allowing a one trillion parameter AI model to run wonderfully.

- Availability: From Dell, HP, Asus, Gigabyte, MSI, Lenovo, Box, and Lambda.

Nvidia RTX Pro Server: Enterprise AI Agents

The new RTX Pro Enterprise and Omniverse server is a versatile solution for enterprise AI. It supports traditional x86 environments, hypervisors, and Kubernetes, allowing seamless integration with existing IT infrastructure.

This server is specifically designed for enterprise AI agents, which can be in text, graphics, or video form. It can handle diverse workloads and modalities, running “every single model that we know of in the world” and “every application that we know of.”

Nvidia AI Robotics & Omniverse Digital Twin

Robotics is a key focus, with Nvidia emphasizing the need for AI to teach AI, particularly through synthetic data generation and reinforcement learning in simulation.

Humanoid Robotics:

- Importance: Addresses the severe global labor shortage.

- Versatility: Humanoid robots can be deployed almost anywhere (brownfield environments) and fit into the world engineered for humans.

- Scale: Likely the next multi-trillion dollar industry due to its potential for technology scale, fostering rapid innovation and enormous consumption of computing and data centers.

- Computational Needs: Requires three types of computers: one for AI learning, a simulation engine for virtual training, and a deployment system.

Omniverse Digital Twin for Factories: As robots are integrated into factories, the factories themselves are becoming robotic and software-defined. Omniverse is crucial for teaching robots to operate as a team within these robotic factories.

Key Takeaway from Digital Twins:

- Nested Digital Twins: Omniverse will enable digital twins of robots, equipment, and entire factories, creating nested digital twins.

- Real-World Applications: Nvidia showcased digital twins of manufacturing lines from Delta, Wiw, Pegatron, Foxconn, Gigabyte, Quanta, Wistron, and even TSMC’s next fab.

- Global Investment: With $5 trillion of new plants being planned globally over the next three years due to re-industrialization, digital twins are vital for cost-effective and timely construction, and for preparing for a robotic future.

- Nvidia’s Own Factory: Nvidia is even building a digital twin of their own AI factory.

Conclusion: A New Industry

Nvidia is not just creating the next generation of IT; it is “creating a whole new industry”. This new industry presents “giant opportunities ahead,” focusing on building AI factories, agents for enterprises, and robots, all within a unified architecture. The keynote concluded with a strong emphasis on partnership in building this future.